Overview

The INVITE Institute seeks to establish a body of general AI techniques, backed by empirical evidence, for supporting noncognitive skill development in STEM learning contexts. The resulting INVITE systems will seek to transform computer-based learning experiences by incorporating advanced tools for tracking learner activities, modeling acquisition of noncognitive skills, collecting teacher input, and providing natural interaction with pedagogical agents. Research will be organized around three synergistic, interconnected strands. Strand 1 will produce rich data for research in foundational and use-inspired AI and make the anonymized data publicly available. Strand 2 will focus on advanced learner modeling to capture noncognitive skill development, which Strand 3 will then leverage to support novel interactions within socially aware STEM learning environments that both understand and engage with learners in meaningful ways.

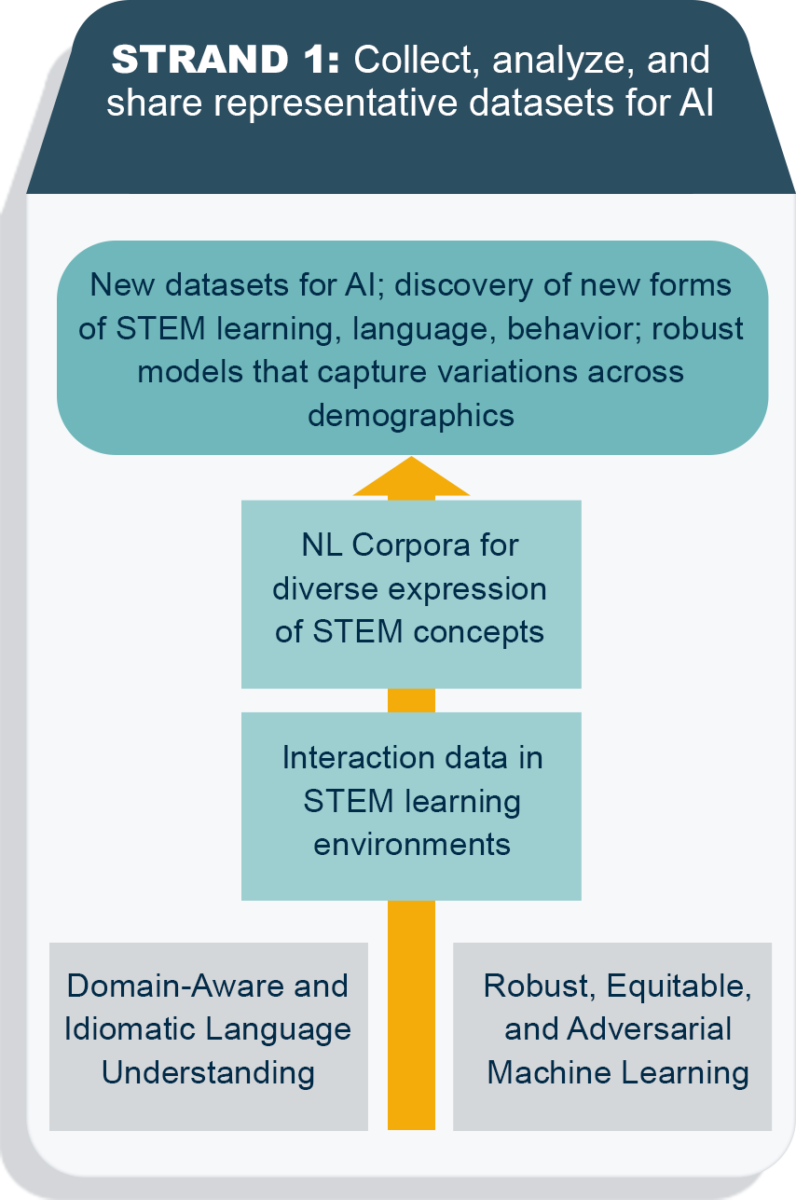

STRAND 1 – REPRESENTATIVE DATASETS

A lack of large-scale, shareable datasets for K-12 learning has proven to be a major obstacle to foundational AI advances that can lead to deeper, more nuanced support for learning across disciplines and contexts. Strand 1 research will be driven by collecting and curating such datasets, enabling foundational advances in AI areas including fair and robust machine learning (ML) and natural language understanding (NLU). Working with the INVITE network of school partners, the Institute will collect and annotate the resulting datasets with three principles in mind:

- Representativeness: reduce bias and increase diversity in educational datasets to facilitate more robust and inclusive ML and NLU models.

- Consistency: ensure similar structure and organization of data across systems, grade bands, and disciplines.

- Shareability: ensure privacy of data sources through anonymization and provide supporting tools and examples for researcher use.

The INVITE Institute will undertake a three-pronged effort to: (1) define formats and requirements of representative datasets; (2) build an open-source community for collection and analysis of data via a cloud-based Open Live Data Lab to enable model sharing; and (3) convene meetings with other AI institutes and researchers across the U.S. to develop common guidelines and coordinated actions to grow the repository.

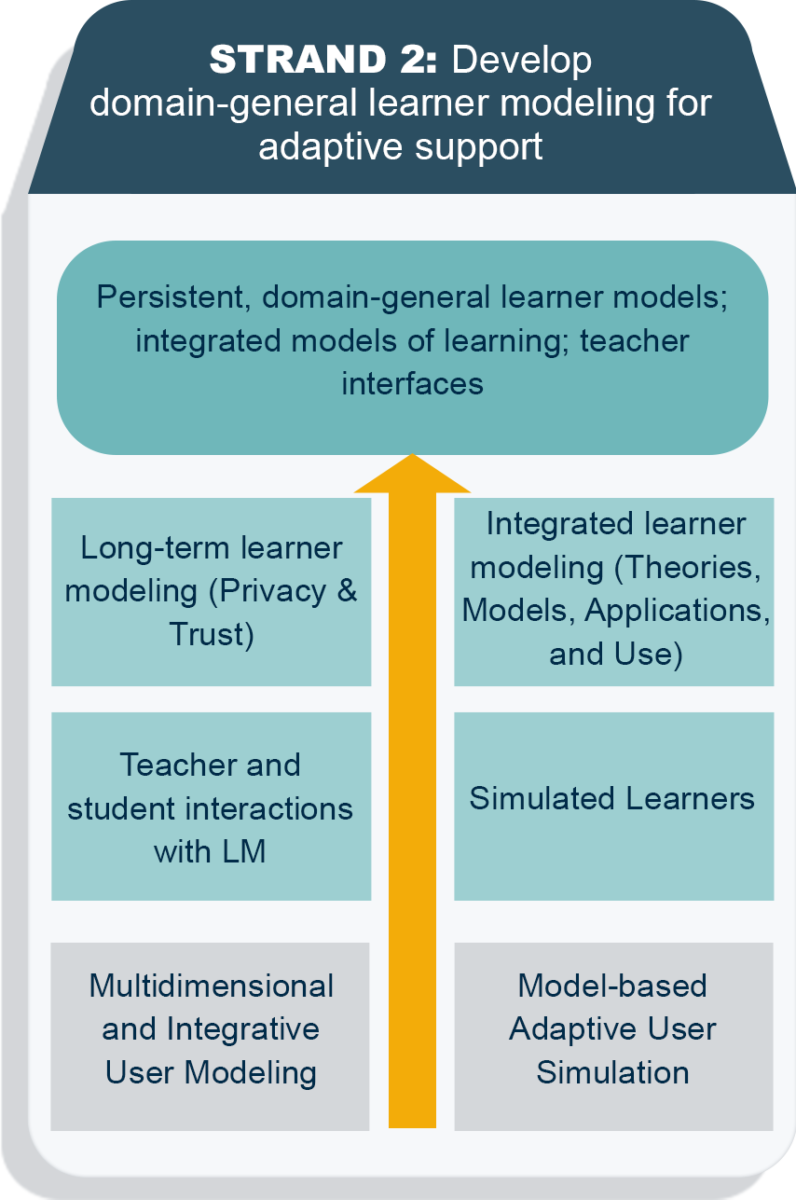

STRAND 2 – LEARNER MODELING

A lack of progress in developing persistent and integrated learner models has limited the ability of AI-augmented learning technologies to support noncognitive skill development. INVITE research will produce novel approaches for assessing noncognitive, cognitive, emotional, and social factors. Foundational AI research will investigate novel NLP approaches and connections to cognitive architectures, interpretable generative models, and next-generation simulated learners.

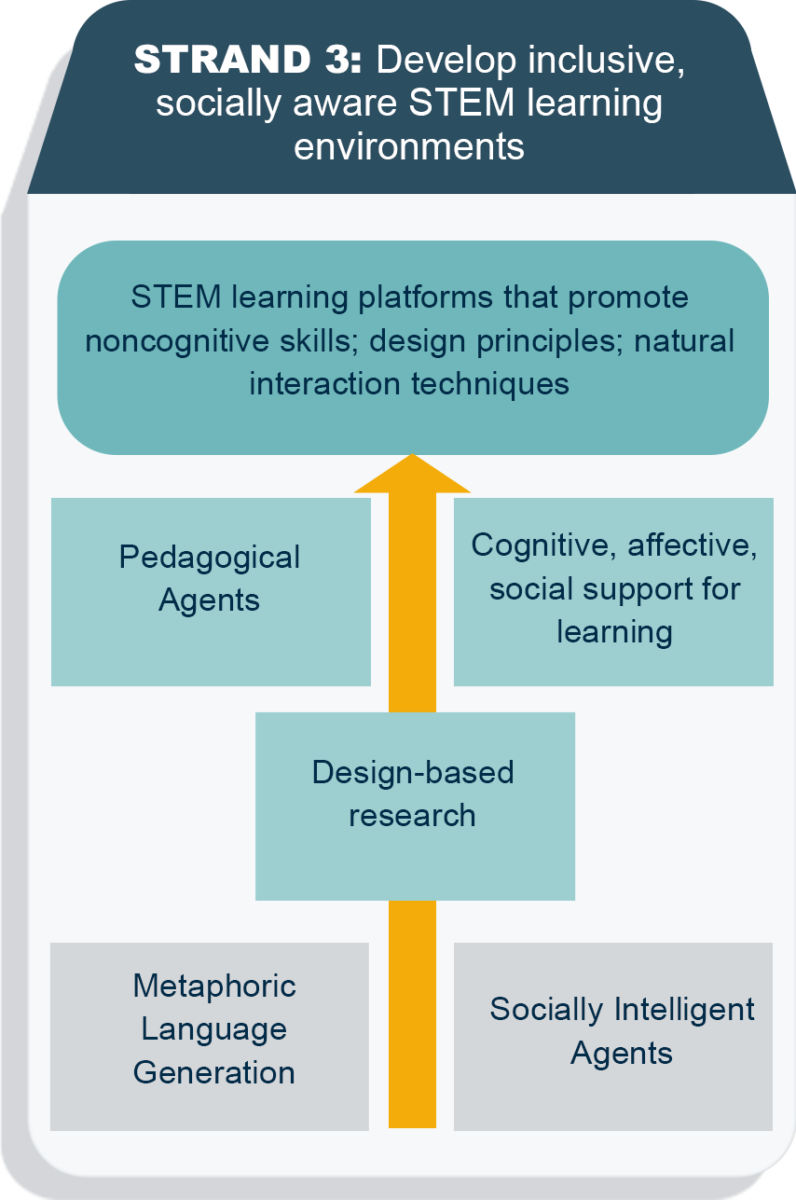

STRAND 3 – STEM LEARNING ENVIRONMENTS

Strand 3 will build on the INVITE team's prior research to advance intelligent support for noncognitive skill development in STEM learning environments. A large portion of this research will focus on topics in computer science education and feed into the INVITE national alliance connecting teachers, technology, and learners. Natural interaction with pedagogical agents (PAs) will enable new computational pedagogical models for developing noncognitive skills and promoting positive self-beliefs. Working with INVITE researchers, partner Balance Studios will develop a PA toolkit that will be deployed and shared with the research community. Design-based research will be applied to support theory-driven, evidence-based iterative development of INVITE systems. Foundational AI research will investigate robust and fair ML methods and capture new agent interaction models for noncognitive skill development.